Last updated: June 2, 2023

After implementing the first iterations of my portfolio's front-end App and back-end Service, I packaged them up into Docker images and ran them together locally using Docker Compose.

Once I was happy with the initial look, feel, and functionality of the portfolio, I wanted to be able to deploy it to a production environment. Running the portfolio from one of my local machines was not feasible. I didn't want to buy and put together a Raspberry Pi, add to my electricity bill, or deal with the security aspects of hosting such infrastructure myself.

I initially thought about hosting on the Google Cloud Platform, but the pricing for the offerings that would apply to my use case (Kubernetes Engine, Compute Engine) was cost-prohibitive. For the traffic I was expecting to receive, I wouldn't need heavy horizontal or vertical scaling. I just needed to run the portfolio images and a few other utility images, like an NGINX reverse proxy and a Let's Encrypt SSL companion to prepare certificates and keep them up to date so the site could serve HTTPS traffic. So really I just needed a small, bare-bones machine. Looking around, it made sense to use Vultr: they offered a small, secure VM for around £5 a month with no bells or whistles.
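As a rough illustration of that supporting stack, here is a Docker Compose sketch. The post does not say which images were used, so `nginxproxy/nginx-proxy` and `nginxproxy/acme-companion` are assumptions, as are the `portfolio` service, its image name, the domain, and the email address.

```yaml
# Sketch only: a reverse proxy plus a Let's Encrypt companion in front of one app.
services:
  nginx-proxy:
    image: nginxproxy/nginx-proxy          # assumed image
    container_name: nginx-proxy
    ports:
      - "80:80"
      - "443:443"
    volumes:
      - certs:/etc/nginx/certs
      - vhost:/etc/nginx/vhost.d
      - html:/usr/share/nginx/html
      - /var/run/docker.sock:/tmp/docker.sock:ro

  acme-companion:
    image: nginxproxy/acme-companion       # assumed image
    environment:
      - NGINX_PROXY_CONTAINER=nginx-proxy  # tells the companion which proxy to manage
      - DEFAULT_EMAIL=admin@example.com    # placeholder address
    volumes:
      - certs:/etc/nginx/certs
      - vhost:/etc/nginx/vhost.d
      - html:/usr/share/nginx/html
      - acme:/etc/acme.sh
      - /var/run/docker.sock:/var/run/docker.sock:ro

  portfolio:
    image: example/portfolio:latest        # hypothetical image name
    environment:
      - VIRTUAL_HOST=example.com           # placeholder domain routed by the proxy
      - LETSENCRYPT_HOST=example.com       # placeholder domain to request a certificate for

volumes:
  certs:
  vhost:
  html:
  acme:
```

With a setup along these lines, the proxy routes requests by host name and the companion requests and renews certificates, so the application containers never handle TLS themselves.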

Once I had set up a Vultr compute instance, I built and pushed the portfolio Docker images myself and deployed them manually with some bash scripts. This meant running a build script on my local machine, then logging into the Vultr instance and running a deployment script there. It was cumbersome and time-consuming, and the way I was managing my content at the time meant it needed to be done every time a text change was made.

I needed a way to leverage the GitHub repository I was using to house the code. I looked at hosting my own Jenkins image in production to watch my Git repository and build new versions of the portfolio images, but this again meant managing my own infrastructure, which comes with the overhead of making sure it's running, up to date, and secure. Throughout my career I have had a fair amount of experience with Azure DevOps and CircleCI, and for this use case the simplicity of CircleCI won out. It integrated with GitHub easily, I could authenticate with my GitHub account, and it was purely configuration as code, so I could design and build pipelines that I could easily version control and maintain.

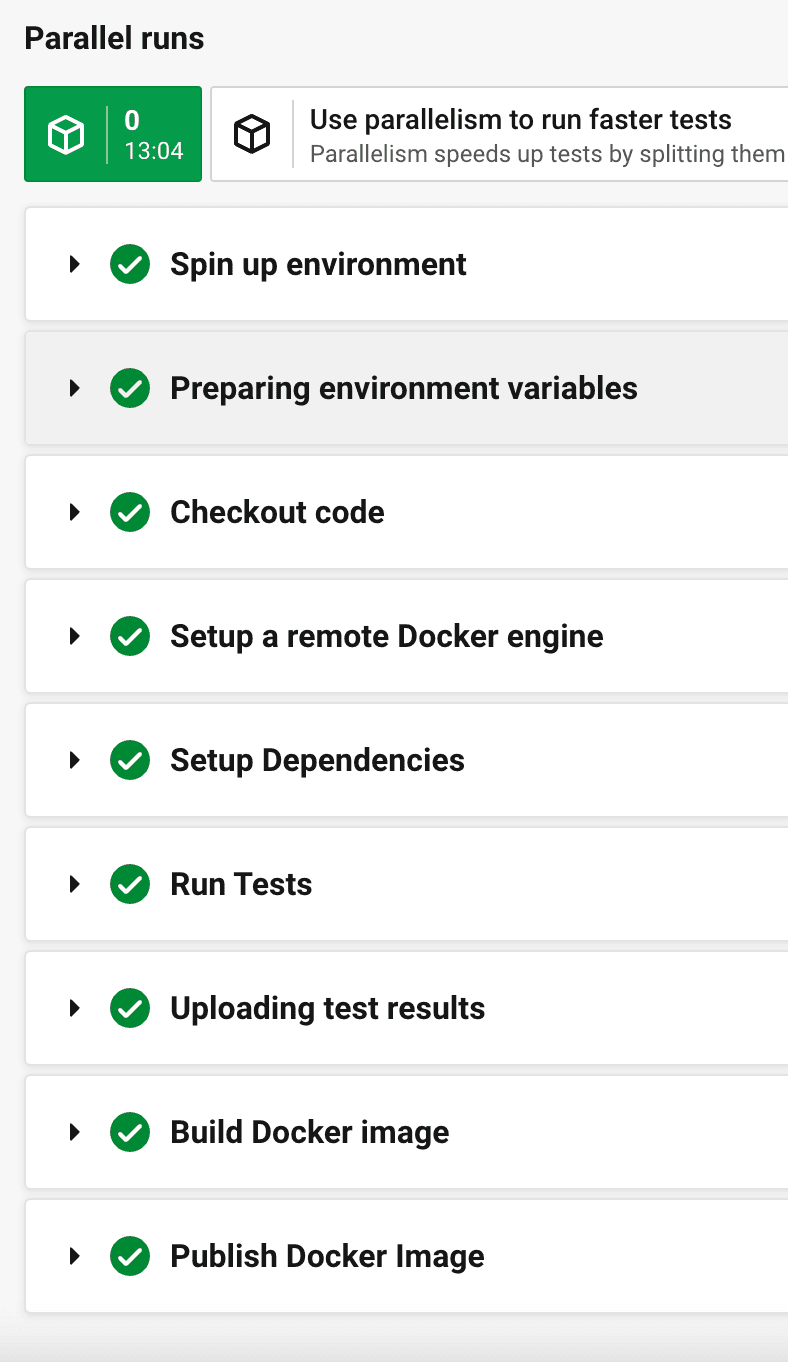

I put together a simple CircleCI pipeline with two jobs: one to build and push the portfolio front-end Docker image and another to build and push the back-end service. The initial pipeline built both images every time any file changed within the repo. This was very time-consuming and meant that even the smallest change caused a whole lot of processing to be run unnecessarily.
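As a rough sketch of that first setup, the workflow wiring could have looked something like the fragment here. The `test-build-push-portfolio` job name is the one used in this post's configuration; `test-build-push-service` and the workflow name `build-everything` are hypothetical names used only for illustration.

```yaml
# Sketch only: fragment of .circleci/config.yml (job definitions omitted).
workflows:
  build-everything:                # hypothetical workflow name
    jobs:
      # Both jobs run on every commit, regardless of which files changed.
      - test-build-push-portfolio
      - test-build-push-service    # hypothetical back-end job name
```

Because neither job is conditional, even a one-line text change rebuilds and pushes both images.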

Below is the configuration of the front-end build job:

```yaml
test-build-push-portfolio:
  docker:
    - image: cimg/node:14.16.0
  steps:
    - checkout
    - setup_remote_docker:
        version: 19.03.13 # https://support.circleci.com/hc/en-us/articles/360050934711-Docker-build-fails-with-EPERM-operation-not-permitted-copyfile-when-using-node-14-9-0-or-later-
    - run:
        name: Run tests
        command: |
          cd portfolio
          npm install
          npm run test -- --watchAll=false --passWithNoTests
          npm run build
    - store_test_results:
        path: ./portfolio
    - run:
        name: Build Docker image
        command: |
          cd portfolio
          docker build -t $DOCKER_REGISTRY/$IMAGE_NAME_PORTFOLIO:latest --build-arg BUILD_MODE=":prod" .
    - run:
        name: Publish Docker Image
        command: |
          echo "$DOCKERHUB_PASS" | docker login -u "$DOCKER_USER" --password-stdin
          docker push $DOCKER_REGISTRY/$IMAGE_NAME_PORTFOLIO:latest
```

The portfolio's Docker images were now being built and pushed to the container registry automatically upon commit, great! But I still had to SSH into my Vultr instance and run the redeployment scripts. I had essentially only swapped one command for another: instead of building the images locally, I was committing changes to GitHub and having CircleCI build the images for me.

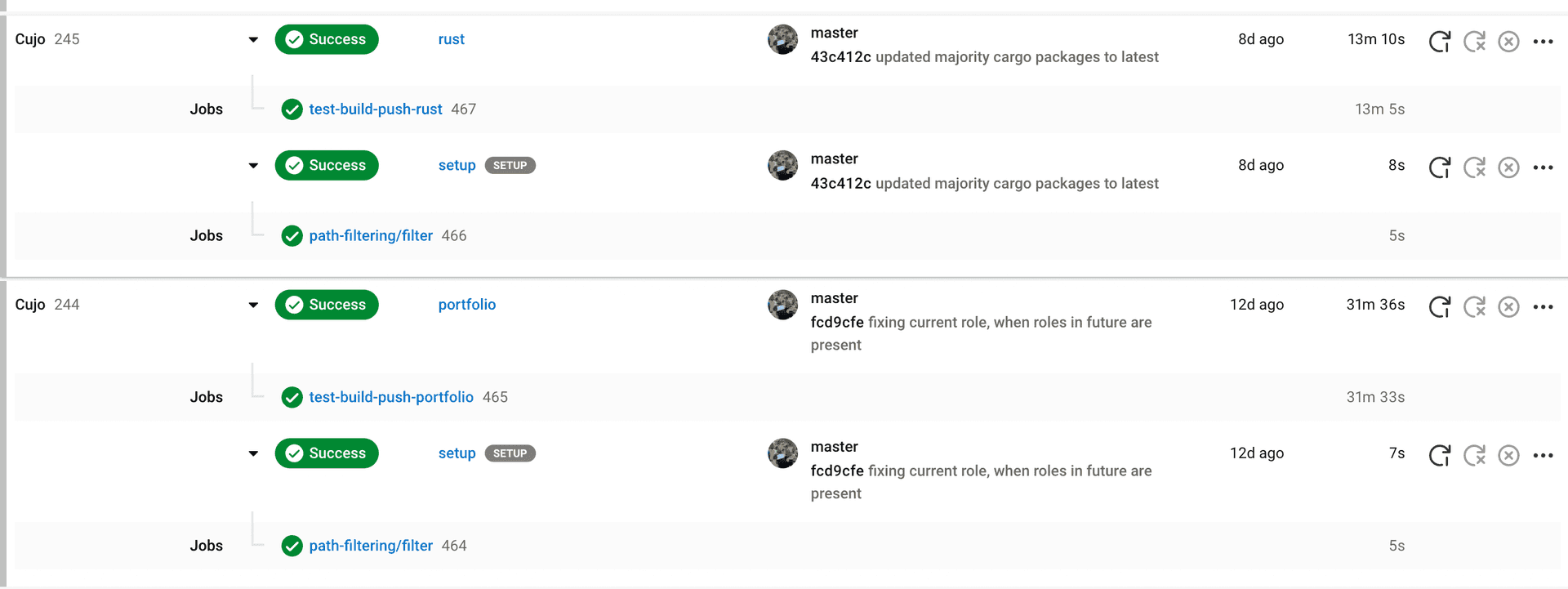

Since a single change to a file would trigger the rebuilding of everything, I looked into how to run CircleCI jobs based on which part of the portfolio had been changed. Reading up on CircleCI dynamic pipelines, I came across their Path Filtering orb, and by loosely following this tutorial I was able to put together a pipeline that triggers the job to build the front-end app or the back-end service only if files within those sub-projects are modified; all other files within the repo are essentially ignored. The latest version of the pipeline configuration can be seen here.
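For illustration, a dynamic configuration using the path-filtering orb might look roughly like the sketch here. It is not the actual configuration of this repository: the orb version, the `service` directory, the parameter names, and the workflow names are all assumptions. Only the `portfolio` directory, the `master` branch, and the `test-build-push-portfolio` job name appear elsewhere in this post.

```yaml
# .circleci/config.yml (setup configuration, sketch only)
version: 2.1
setup: true   # marks this as a setup workflow for dynamic configuration

orbs:
  path-filtering: circleci/path-filtering@0.1.1   # version is an assumption

workflows:
  detect-changes:
    jobs:
      - path-filtering/filter:
          base-revision: master
          config-path: .circleci/continue_config.yml
          # Each mapping line: <path regex> <pipeline parameter> <value>
          mapping: |
            portfolio/.* run-portfolio-job true
            service/.* run-service-job true
```

The continuation configuration then runs a workflow only when its parameter was set by the filter:

```yaml
# .circleci/continue_config.yml (sketch only, job definitions omitted)
version: 2.1

parameters:
  run-portfolio-job:
    type: boolean
    default: false
  run-service-job:
    type: boolean
    default: false

workflows:
  portfolio:
    when: << pipeline.parameters.run-portfolio-job >>
    jobs:
      - test-build-push-portfolio
  service:
    when: << pipeline.parameters.run-service-job >>
    jobs:
      - test-build-push-service   # hypothetical back-end job name
```

A commit that touches neither directory sets no parameters, so no build workflow runs.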

Now my game plan was to automate the manual deployment step on the prod machine. I had a script that would tear down and redeploy whichever Docker image I wanted, and I essentially wanted to automate that.

The main artefact being deployed is a Docker image, and these images are stored in a Docker Hub registry, so I needed a way to know when one of my portfolio images was updated and then run my redeployment script. Since I was using Docker Compose, I wanted to see if I could leverage a container to handle redeployment for me, in much the same way as I use a reverse proxy container to route calls to my portfolio containers.

I came across the following tutorial for a project called Watchtower, a Docker container that watches for updates to the images of the containers running alongside it and redeploys them with the same configuration.

1version: "3.6"

2services:

3 watchtower:

4 image: containrrr/watchtower

5 container_name: watchtower

6 environment:

7 - WATCHTOWER_POLL_INTERVAL=300

8 volumes:

9 - /var/run/docker.sock:/var/run/docker.sock

10 restart: always

11 networks:

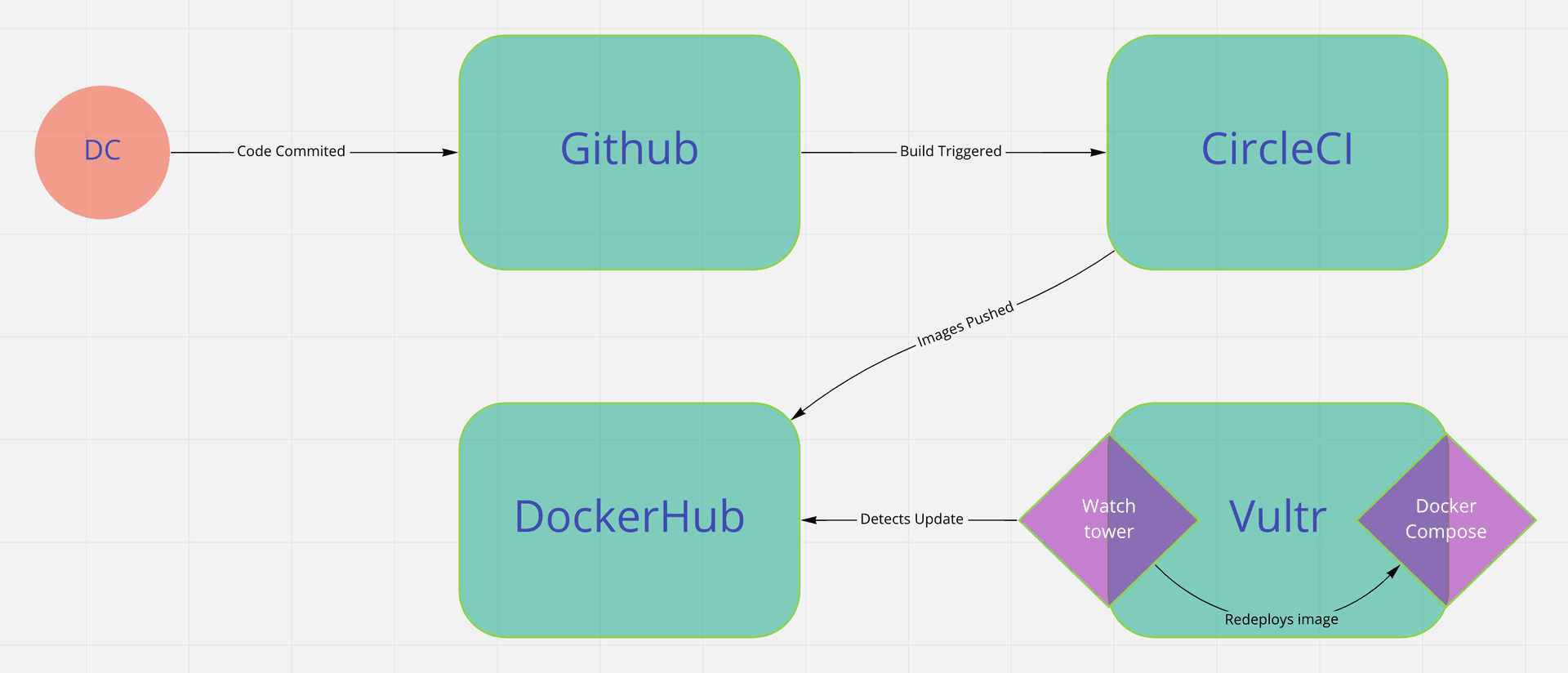

12 - cujoAdding this single container to the stack, was a super simple quick win that automated away the manual redeployment step, meaning that I could simply commit new code to master, and its effects would be carried to prod through this ramshackle pipeline:

- The code is committed to GitHub.
- CircleCI runs a job based on which project is modified.
- The CircleCI job pushes the built Docker image to Docker Hub.
- The Watchtower image deployed in the Docker Compose production stack detects a new image version.
- Watchtower redeploys the new image.

One minor issue I have found is that Watchtower does not clean up old Docker images when it pulls the latest from the container registry, which leads to the compute instance's local storage filling up with old data and requires manual clean-up from time to time. This is something that should be automated away.
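One possible way to automate the image clean-up mentioned above is Watchtower's `WATCHTOWER_CLEANUP` setting, which removes the old image once a container has been updated to a newer one. A minimal sketch, assuming the same Watchtower service definition used in this post and not yet tried against this stack:

```yaml
services:
  watchtower:
    image: containrrr/watchtower
    container_name: watchtower
    environment:
      - WATCHTOWER_POLL_INTERVAL=300
      - WATCHTOWER_CLEANUP=true   # remove superseded images after each update
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
    restart: always
    networks:
      - cujo
```

Images left over from before the setting was enabled would likely still need a one-off manual prune.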