Last updated: June 2, 2023

After failing to win over a company in a system design interview, I felt I needed to brush up on the system design skills expected of a product or senior engineer. The feedback I received was that I did not express confidence in owning my design. While I could articulate the pros and cons and understand the trade-offs of particular technologies and architectures, I could not say with certainty that a given choice was the best fit for the functionality. Perhaps it was a lack of experience with this kind of interview, or perhaps I treated it as a collaborative session in which we explored the journey to a fleshed-out design. In any case, I take it to mean that I need to lift my eyes above the code itself and think more about the technical landscape the code resides in.

To gain more experience, I turned to my portfolio, which is made up of a small constellation of Docker containers (an app and a service) alongside the Contentful CMS, built on CircleCI and monitored and deployed using Watchtower. It's a small-scale system without much in the way of scalability, but for the scant number of users I see trickle in to view my portfolio, it's enough for now.

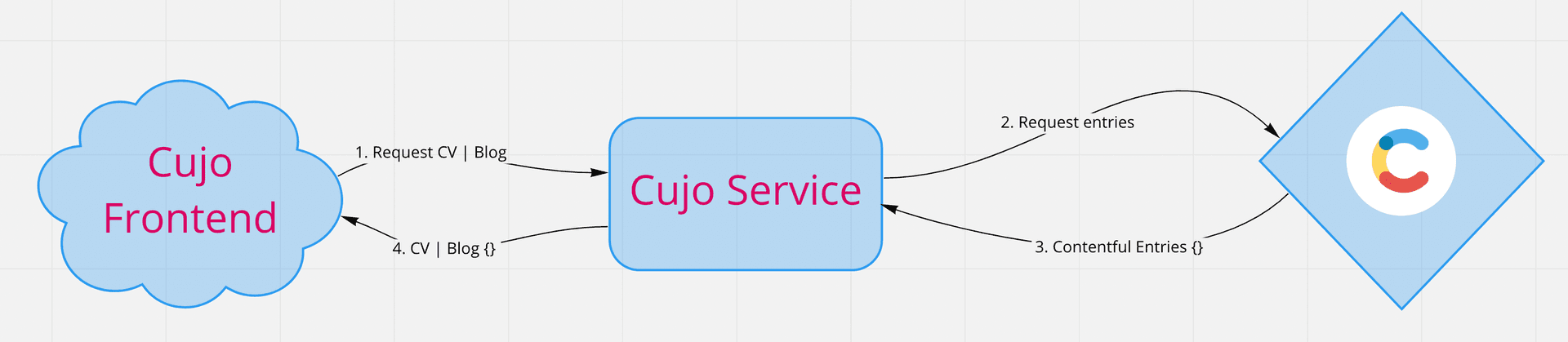

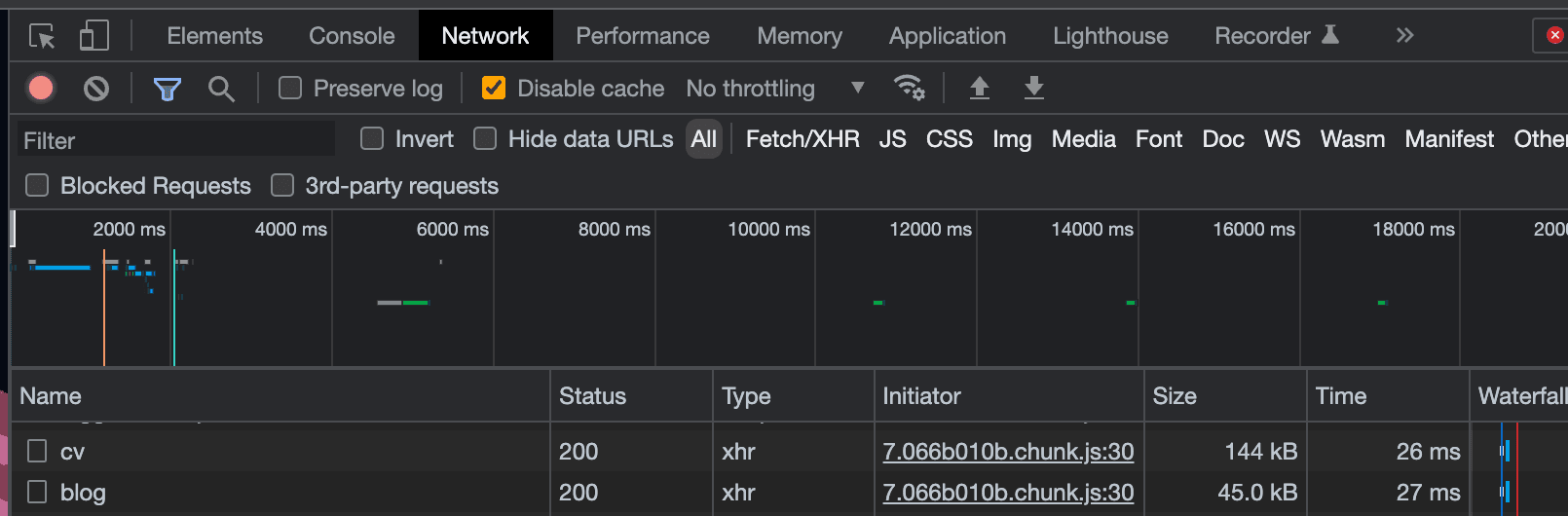

While thinking about how async distributed systems work, I could see a few glaring issues in the current architecture. The top-down process used to transport data from the Contentful CMS up to the front end is quite inefficient. It is entirely user-driven: the user accesses the front end, which makes two concurrent calls to my backend, which in turn makes five calls to Contentful to collect the portfolio content and another call to gather the blog posts. This seems all well and good, and generally gets the data to the user within a second or so, but with potentially hundreds of users accessing my portfolio, the number of network calls can stack up considerably, degrading performance and potentially damaging first impressions with prospective employers.
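To put numbers on that fan-out, here is a back-of-the-envelope sketch using only the counts above: 2 front-end calls to the backend plus 5 + 1 calls to Contentful for every page load. The function names are hypothetical, and the 300 page loads in `main` is an illustrative figure, not a measurement.

```rust
// Back-of-the-envelope model of the original, user-driven flow.
// Counts come from the text: 2 front-end -> backend calls per page load,
// plus 5 Contentful calls for the portfolio and 1 for the blog posts.
const FRONTEND_TO_BACKEND: u32 = 2;
const CONTENTFUL_PORTFOLIO_CALLS: u32 = 5;
const CONTENTFUL_BLOG_CALLS: u32 = 1;

/// Network calls triggered by a single page load.
fn calls_per_page_load() -> u32 {
    FRONTEND_TO_BACKEND + CONTENTFUL_PORTFOLIO_CALLS + CONTENTFUL_BLOG_CALLS
}

/// Network calls for `page_loads` visits; grows linearly with traffic.
fn total_calls(page_loads: u32) -> u32 {
    page_loads * calls_per_page_load()
}

fn main() {
    // 300 page loads is a made-up figure for illustration.
    println!("{} calls per page load", calls_per_page_load());
    println!("{} calls for 300 page loads", total_calls(300));
}
```

The point is the shape rather than the exact numbers: every visitor pays for every call, so the external traffic scales with page views, not with how often the content actually changes.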

It would make more sense for the collection of the latest content from Contentful to be triggered from the CMS side rather than by the user. The backend service should maintain a cache of the latest content, ready to be served instantly to visitors to my portfolio front end, without making calls to external services while the user waits in line.

In theory, this should improve front-end performance, as content is returned instantly instead of being fetched and processed while the user waits.

- Does Contentful provide a way to emit events when content is published or unpublished, at the very least?

- How would we consume those events: via REST or Pub/Sub?

- We don't want just anyone to be able to invoke the cache regeneration endpoints, so we should authenticate these clients.

- Does it make sense for now to hold the cache in memory, or do we need something like Redis to hold the data for us?

As it turns out, Contentful has quite powerful webhook functionality: it can issue outbound REST requests for particular content types and for almost all of their content state transitions. For me, the most important ones are publish and unpublish. You can also customise the webhook's endpoint URL, request method, payload, and request headers, allowing you to craft requests tailored to your API and its requirements with ease. One downside is that Contentful doesn't offer a playground or test bench for webhooks; it's just a case of setting one up and seeing if it flies.
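On the receiving side, the backend only needs to react to the publish and unpublish transitions. The sketch below assumes the topic arrives as a string of the form `ContentManagement.Entry.publish` (Contentful documents sending it in the `X-Contentful-Topic` header); the `CacheAction` enum and `action_for_topic` function are hypothetical names, and asset events are deliberately ignored here for simplicity.

```rust
/// What the backend should do with an incoming webhook.
#[derive(Debug, PartialEq)]
enum CacheAction {
    Regenerate,
    Ignore,
}

/// Decide what to do based on the webhook topic, assumed to look like
/// `ContentManagement.Entry.publish`. Only entry publish/unpublish events
/// trigger a regeneration; everything else (saves, assets, ...) is ignored.
fn action_for_topic(topic: &str) -> CacheAction {
    let mut parts = topic.split('.');
    match (parts.next(), parts.next(), parts.next(), parts.next()) {
        (Some("ContentManagement"), Some("Entry"), Some(action), None)
            if action == "publish" || action == "unpublish" =>
        {
            CacheAction::Regenerate
        }
        _ => CacheAction::Ignore,
    }
}

fn main() {
    for topic in [
        "ContentManagement.Entry.publish",
        "ContentManagement.Entry.unpublish",
        "ContentManagement.Entry.save",
        "ContentManagement.Asset.publish",
    ] {
        println!("{topic} -> {:?}", action_for_topic(topic));
    }
}
```

Filtering on the topic keeps noisy transitions, such as draft saves, from triggering needless calls back to Contentful.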

I wanted to authenticate incoming webhook requests so that I could be sure a bad actor somewhere couldn't sabotage my new backend system. I also didn't want to hand-roll my own authentication mechanism: that would be reinventing a wheel, likely of poorer quality, when a battle-tested offering could be used instead.

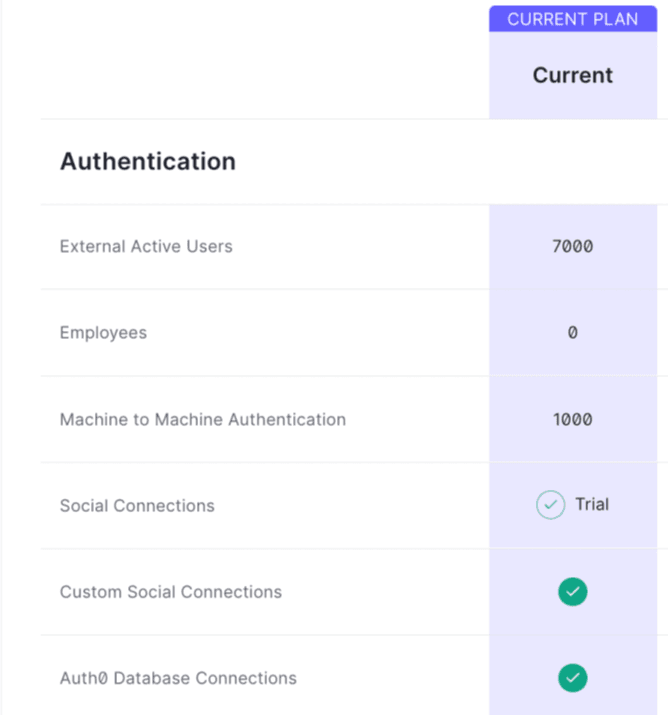

Looking around the different AaaS (Authentication as a Service) offerings, I could see that Auth0 was a good fit. It has a generous free tier, with 1,000 machine-to-machine authentications per month, which would more than cover the fewer than 25 webhook calls that could be made per month.

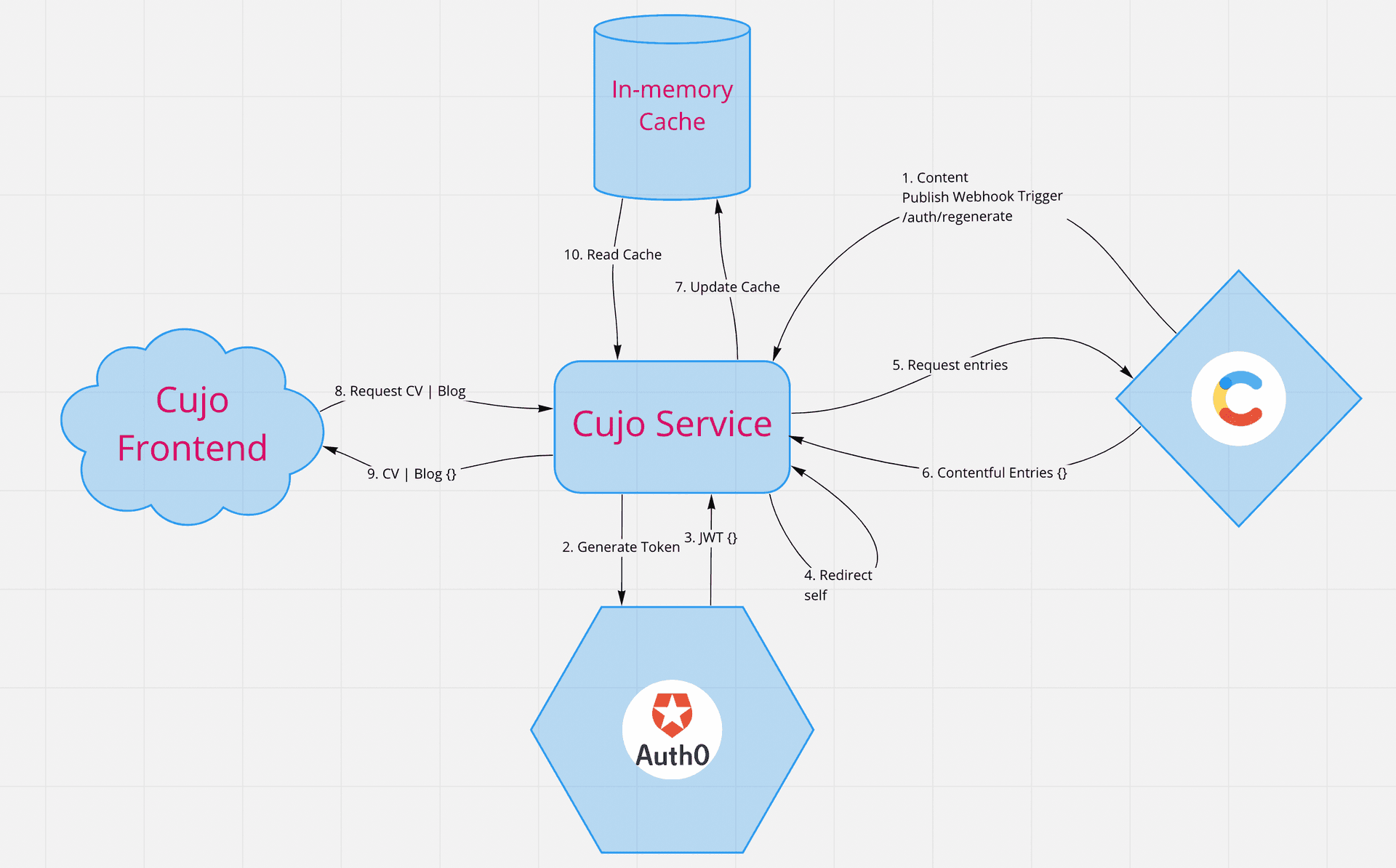

To delve into implementation details for a moment: I opted to have the webhook pass client credentials to my backend service, which uses them to authenticate with Auth0. If that succeeds, the service, with the token in hand, redirects back to itself and validates the token, almost as a form of 2FA. This also simplified dealing with Contentful's webhooks: although Contentful has a webhook API, using it would require extra work persisting JWT tokens, so it made sense to keep things simple without it.
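The two halves of that flow can be sketched with the standard library alone. The first part builds the body of an OAuth 2.0 client-credentials request, the grant Auth0 uses for machine-to-machine tokens, as it might be POSTed to Auth0's `/oauth/token` endpoint. The second pulls the token back out of an `Authorization: Bearer` header. Which webhook headers carry the credentials isn't stated above, so header extraction is left out; all function names and the values in `main` are hypothetical. The JWT check is only a shape check: a real service must still verify the signature, issuer, audience, and expiry with a JWT library against Auth0's published keys.

```rust
/// Percent-encode a value for an `application/x-www-form-urlencoded` body.
/// RFC 3986 unreserved characters pass through; every other byte becomes %XX.
fn form_encode(value: &str) -> String {
    let mut out = String::new();
    for byte in value.bytes() {
        match byte {
            b'A'..=b'Z' | b'a'..=b'z' | b'0'..=b'9' | b'-' | b'.' | b'_' | b'~' => {
                out.push(byte as char)
            }
            _ => out.push_str(&format!("%{byte:02X}")),
        }
    }
    out
}

/// Body of an OAuth 2.0 client-credentials token request.
fn client_credentials_body(client_id: &str, client_secret: &str, audience: &str) -> String {
    format!(
        "grant_type=client_credentials&client_id={}&client_secret={}&audience={}",
        form_encode(client_id),
        form_encode(client_secret),
        form_encode(audience)
    )
}

/// Extract the token from an `Authorization: Bearer <token>` header value and
/// check it has the three non-empty dot-separated segments of a JWT.
/// This does NOT verify the signature or any claims.
fn bearer_jwt(header_value: &str) -> Option<&str> {
    let token = header_value.strip_prefix("Bearer ")?;
    let segments: Vec<&str> = token.split('.').collect();
    if segments.len() == 3 && segments.iter().all(|s| !s.is_empty()) {
        Some(token)
    } else {
        None
    }
}

fn main() {
    // Placeholder credentials for illustration only.
    let body = client_credentials_body("my-client", "s3cret/+", "https://api.example.com");
    println!("{body}");
    println!("{:?}", bearer_jwt("Bearer aaa.bbb.ccc"));
}
```

Keeping the credential exchange and the token check as separate steps mirrors the "redirect back to itself" design: the first request proves the caller holds valid client credentials, and the second only proceeds once a well-formed token is presented.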

I opted for a simple in-memory cache, though it could barely be called a true cache at all. Digging into the actual implementation, it's just a Rust struct wrapped in a Mutex:

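```rust
use std::sync::Mutex;

use actix_web::web::Data;

/// Snapshot of the pre-serialised CV and blog content.
#[derive(Debug, Clone, Default)]
pub struct Cache {
    pub cv: String,
    pub blog: String,
}

fn main() {
    // `Data` is actix-web's shared-state wrapper (an `Arc` internally); the
    // cache would be registered on the `App` with `.app_data(cache.clone())`.
    let cache = Data::new(Mutex::new(Cache::default()));
    println!("{:?}", cache.lock().unwrap());
}
```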

Since the data isn't time-sensitive and doesn't grow over time, this is adequate for storing a snapshot of the portfolio and blog. A bonus of using a struct is constant-time access to each field, with no hashing or key lookup involved. I considered a HashMap, but since the keys are always `cv` and `blog`, a struct is the better fit.
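To show how the Mutex-wrapped struct behaves under concurrent access, here is a std-only sketch in which `Arc<Mutex<Cache>>` stands in for actix-web's `Data<Mutex<Cache>>` (which is itself an `Arc` wrapper). The `regenerate_blog` and `read_blog` functions are hypothetical stand-ins for the webhook handler and the request handler.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

#[derive(Debug, Clone, Default)]
pub struct Cache {
    pub cv: String,
    pub blog: String,
}

/// Webhook path: swap in freshly serialised content while holding the lock.
fn regenerate_blog(cache: &Mutex<Cache>, serialised: String) {
    let mut guard = cache.lock().expect("cache mutex poisoned");
    guard.blog = serialised;
}

/// Request path: clone the snapshot; the guard is dropped right after.
fn read_blog(cache: &Mutex<Cache>) -> String {
    cache.lock().expect("cache mutex poisoned").blog.clone()
}

fn main() {
    let cache = Arc::new(Mutex::new(Cache::default()));

    regenerate_blog(&cache, r#"[{"title":"Hello"}]"#.to_string());

    // Several concurrent "requests" all read the same snapshot.
    let handles: Vec<_> = (0..4)
        .map(|_| {
            let cache = Arc::clone(&cache);
            thread::spawn(move || read_blog(&cache))
        })
        .collect();

    for handle in handles {
        assert_eq!(handle.join().unwrap(), r#"[{"title":"Hello"}]"#);
    }
    println!("all readers saw the same snapshot");
}
```

Because readers only hold the lock long enough to copy a string, contention stays low even though writes and reads share a single Mutex.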

Caching the content as strings removes the need to serialise it when a user requests it, shaving 10 to 20 ms of mainline processing off each request on average. The content is serialised only when the Contentful webhook fires.
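The serialise-on-write idea can be sketched as a function that runs once per webhook and produces the exact JSON string stored in the cache, so every later request just returns that string. The `BlogPost` shape is hypothetical, and a real service would more likely use `serde_json`; the hand-written escaping here only keeps the sketch dependency-free.

```rust
use std::fmt::Write;

/// Hypothetical shape of a blog post fetched from Contentful.
struct BlogPost {
    title: String,
    slug: String,
}

/// Escape a string for use inside a JSON string literal.
fn json_escape(s: &str) -> String {
    let mut out = String::with_capacity(s.len());
    for c in s.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            '\n' => out.push_str("\\n"),
            '\r' => out.push_str("\\r"),
            '\t' => out.push_str("\\t"),
            // Remaining control characters must be \u-escaped in JSON.
            c if (c as u32) < 0x20 => {
                write!(out, "\\u{:04x}", c as u32).unwrap();
            }
            c => out.push(c),
        }
    }
    out
}

/// Runs once per webhook: builds the string stored in `Cache::blog`,
/// which is then served verbatim to every visitor.
fn serialise_blog(posts: &[BlogPost]) -> String {
    let items: Vec<String> = posts
        .iter()
        .map(|p| {
            format!(
                "{{\"title\":\"{}\",\"slug\":\"{}\"}}",
                json_escape(&p.title),
                json_escape(&p.slug)
            )
        })
        .collect();
    format!("[{}]", items.join(","))
}

fn main() {
    let posts = vec![BlogPost { title: "Say \"hi\"".into(), slug: "say-hi".into() }];
    let cached = serialise_blog(&posts);
    println!("{cached}"); // [{"title":"Say \"hi\"","slug":"say-hi"}]
}
```

The cost of serialisation moves from every page view to every publish event, which for a portfolio happens a handful of times a month.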

With CMS webhooks, client authentication, and caching all in place, this design triggers a cache regeneration only when data changes in Contentful. The data is always up to date, ready and waiting to be served to the front end whenever it is accessed, and the cache regeneration endpoints are protected against unauthenticated callers.

With all this running in production, we can see at least a 10x front-end performance improvement: backend requests now respond consistently within 9 to 20 ms, down from 400 to 700 ms per cold request (not cached by the browser) and 150 to 350 ms per warm, browser-cached request.

The design below outlines the new content update flow. The system is now data-driven and more autonomous, rather than user-driven. The number of requests to Contentful drops to 5 per CV update and 1 per blog update, instead of 8 network calls (6 of them to Contentful) per front-end page load.
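The event-driven cost model can be sketched the same way: Contentful traffic now depends only on which content types change, using the counts above (5 requests to rebuild the CV, 1 for the blog). The `ContentType` enum, the function names, and the month of events in `main` are all hypothetical.

```rust
/// Content types the webhook can report (a simplification of the real model).
#[derive(Debug, Clone, Copy, PartialEq)]
enum ContentType {
    Cv,
    Blog,
}

/// Contentful requests needed to rebuild one cache field, per the text.
fn contentful_requests(content: ContentType) -> u32 {
    match content {
        ContentType::Cv => 5,
        ContentType::Blog => 1,
    }
}

/// Total Contentful requests for a sequence of publish events. Page loads
/// do not appear here at all: visitors are served from the cache.
fn requests_for_events(events: &[ContentType]) -> u32 {
    events.iter().map(|&e| contentful_requests(e)).sum()
}

fn main() {
    // Made-up month: two CV edits and three blog publishes.
    let events = [
        ContentType::Cv,
        ContentType::Blog,
        ContentType::Blog,
        ContentType::Cv,
        ContentType::Blog,
    ];
    println!("Contentful requests this month: {}", requests_for_events(&events));
}
```

Whether the month sees ten visitors or ten thousand, the Contentful request count stays the same; only publishing activity moves it.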